sandeep

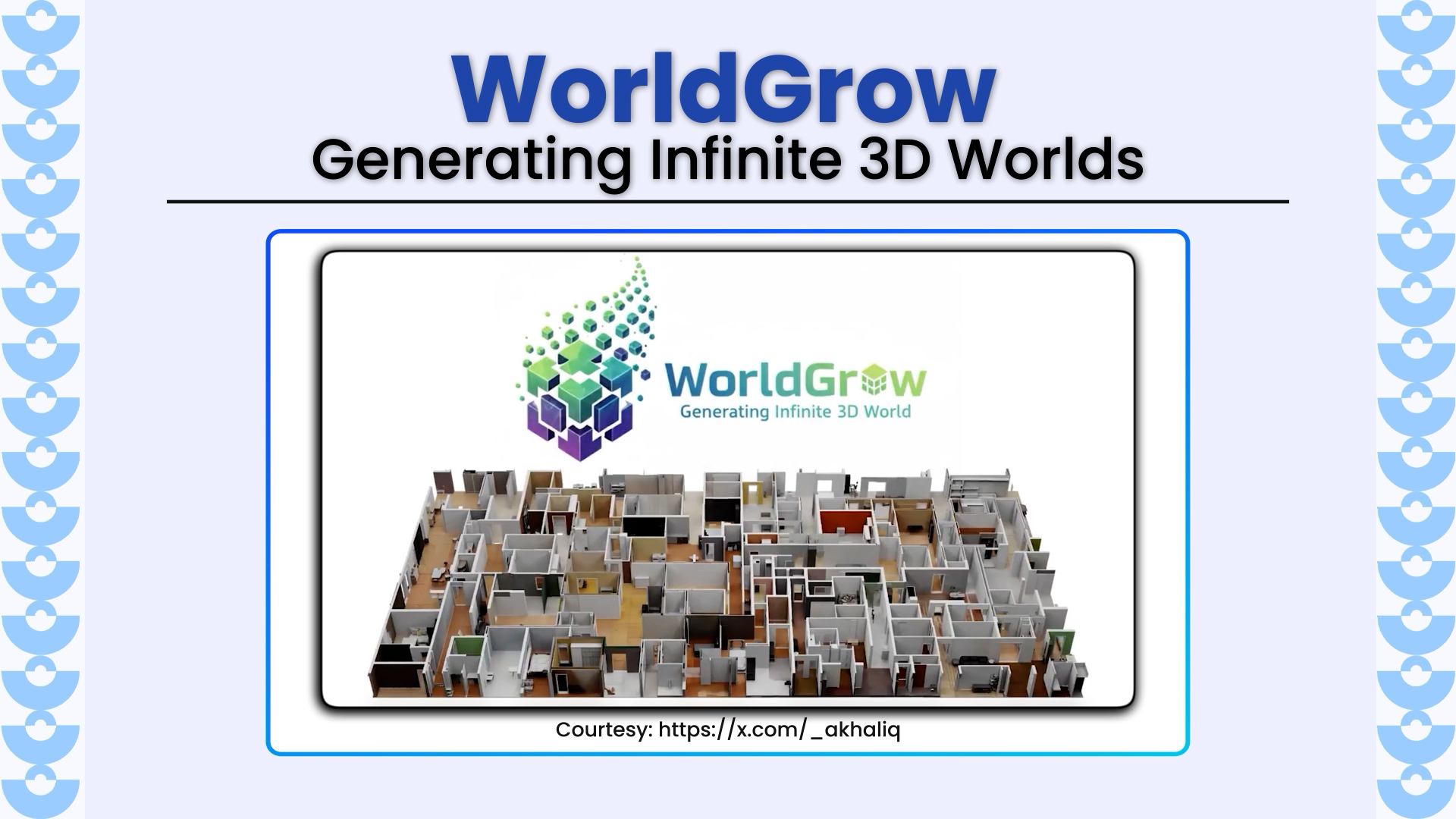

WorldGrow redefines 3D world generation by enabling infinite, continuous 3D scene creation through a hierarchical block-wise synthesis and inpainting pipeline. Developed by researchers from Shanghai Jiao Tong University, Huawei Inc.,

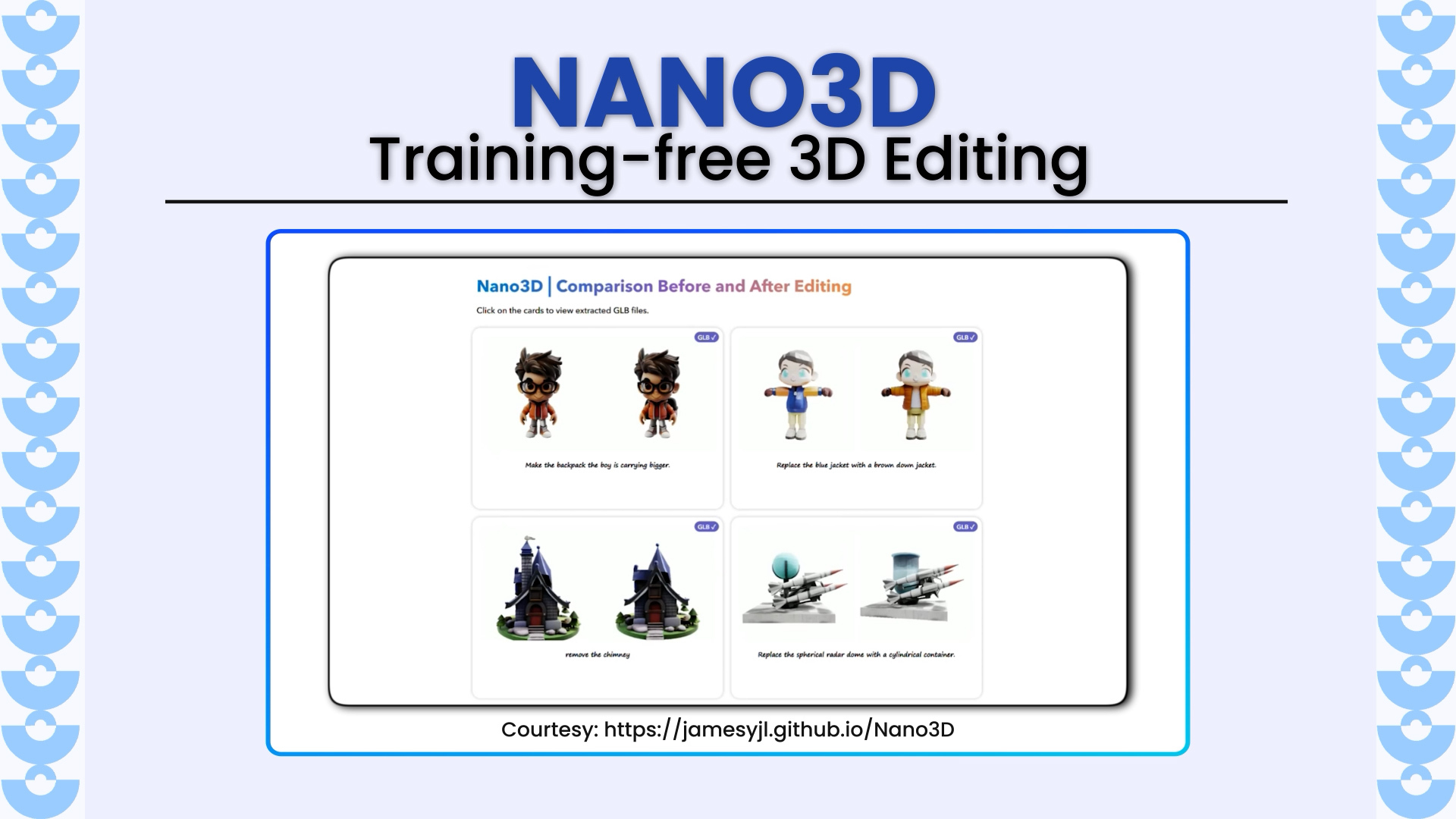

Nano3D revolutionizes 3D asset editing by enabling training-free, part-level shape modifications like removal, addition, and replacement without any manual masks. Developed by researchers from Tsinghua University, Peking University, HKUST, CASIA,

Triangle Splatting+ redefines 3D scene reconstruction and rendering by directly optimizing opaque triangles, the fundamental primitive of computer graphics, in a fully differentiable framework. Unlike Gaussian Splatting or NeRF-based approaches,

Code2Video introduces a revolutionary framework for generating professional educational videos directly from executable Python code. Unlike pixel-based diffusion or text-to-video models, Code2Video treats code as the core generative medium, enabling

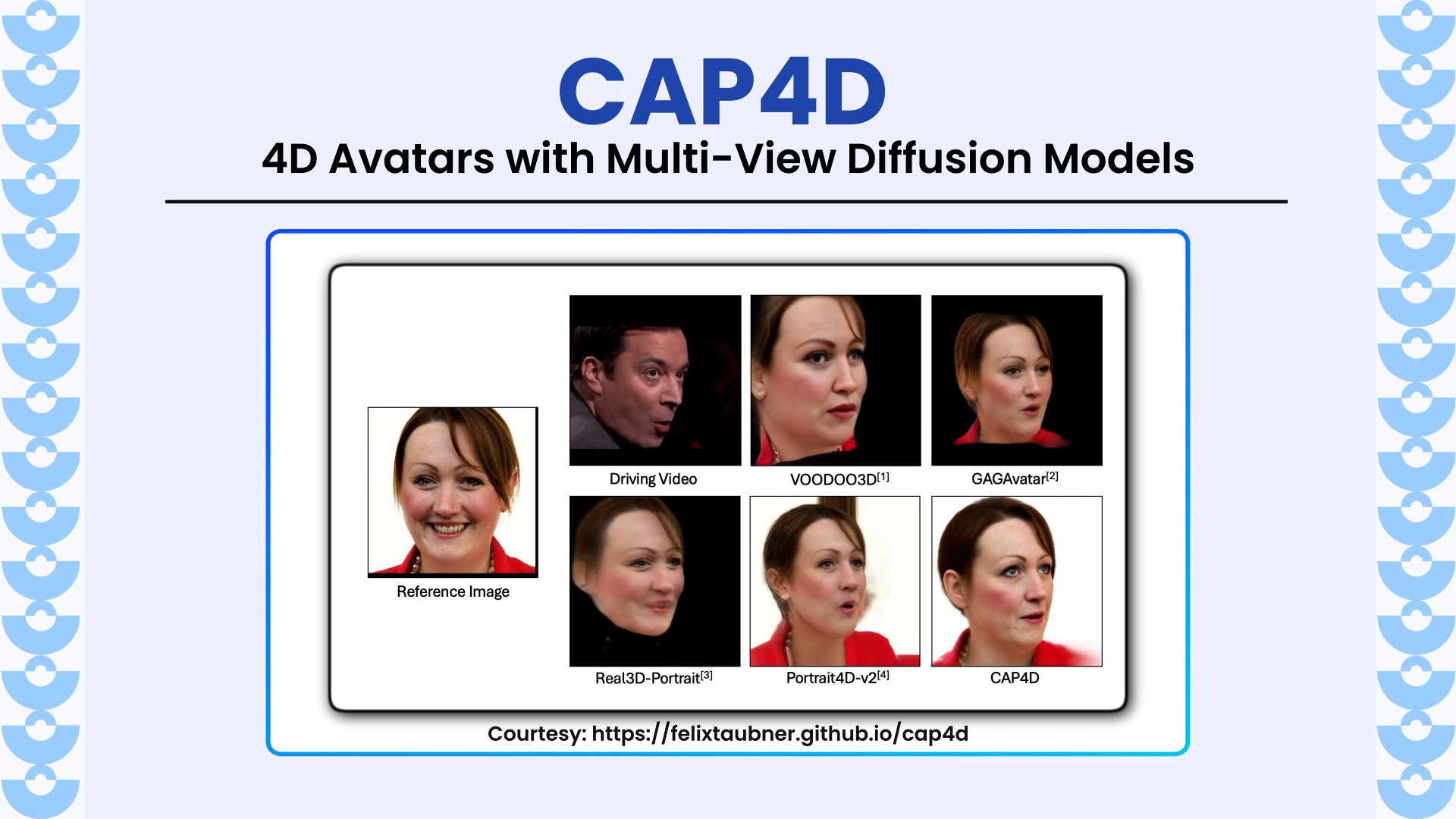

CAP4D introduces a unified framework for generating photorealistic, animatable 4D portrait avatars from any number of reference images, even a single one. By combining

Test3R is a novel and simple test-time learning technique that significantly improves 3D reconstruction quality. Unlike traditional pairwise methods such as DUSt3R, which often suffer from geometric inconsistencies and poor generalization,

BlenderFusion is a novel framework that merges 3D graphics editing with diffusion models to enable precise, 3D-aware visual compositing. Unlike prior approaches that struggle with multi-object and camera disentanglement, BlenderFusion

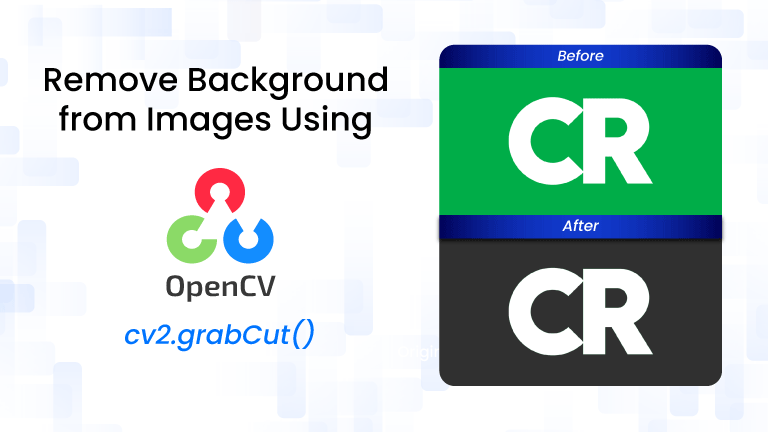

Ever wondered how those slick background removal tools actually work? You upload a photo, click a button, and boom, the subject pops while the clutter disappears. But behind that magic

The Google DeepMind team has unveiled the latest evolution in its family of open models, Gemma 3, and it’s a monumental leap forward. While the AI space is crowded

LongSplat is a new framework that achieves high-quality novel view synthesis from casually captured long videos, without requiring camera poses. It overcomes challenges like irregular motion, pose drift, and memory

DINOv3 is a next-generation vision foundation model trained purely with self-supervised learning. It introduces innovations that allow robust dense feature learning at scale with models reaching 7B parameters and achieves

Genie 3 is a general-purpose world model that, given just a text prompt, generates dynamic, interactive environments in real time, rendered at 720p and 24 fps, while maintaining consistency over